Part 1: The Medical AI Background: From Simple Tools to Complex Systems

Picture this: you’re a radiologist examining a chest X-ray, and an AI system flags a potential abnormality with 95% confidence. The diagnosis could change a patient’s life, but when you ask the system to explain its reasoning, you get a complex visualization that tells you almost nothing about how it reached this conclusion. This scenario, once relegated to science fiction, has become a daily reality in modern healthcare.

Research has consistently demonstrated that interpretability has been fundamental to clinical adoption since the earliest integration of AI into radiology. The medical community’s sustained emphasis on understanding AI decision-making processes reflects deeper concerns about professional accountability and patient safety that have historically shaped the field’s approach to emerging technologies.

The trajectory of AI development in healthcare has created unprecedented opportunities alongside significant challenges. Over the past decade, Artificial Intelligence (AI) has advanced remarkably across many domains and has become an indispensable component of medical research (Borys et al., 2023). However, this rapid advancement has created a fundamental tension between system capability and clinical comprehensibility: the inherent complexity and lack of transparency of these systems make it difficult to readily understand their decisions (Borys et al., 2023).

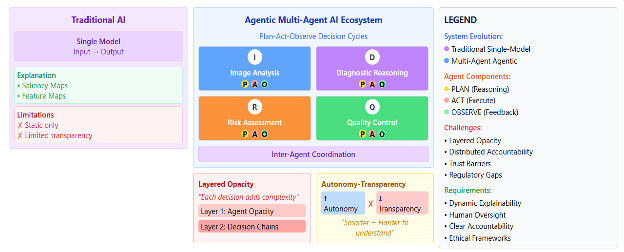

The evolution of these systems into autonomous agents capable of executing multi-step reasoning and collaborating across multiple agents has rendered traditional explainability methods increasingly inadequate. The interpretability techniques that demonstrated reasonable effectiveness for single-model systems—including saliency maps, attention visualizations, and feature importance rankings—encounter substantial limitations when attempting to capture the complex interactions and emergent behaviors that characterize collaborative AI agent networks working toward diagnostic conclusions.
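To make concrete what a single-model explanation delivers, here is a minimal sketch of one saliency technique, occlusion sensitivity. It is chosen here only for simplicity; gradient-based saliency maps are also widely used. The sketch assumes a hypothetical toy model, `toy_model`, that maps one image to one score; the names and the 16x16 image are illustrative and do not come from any clinical system.

```python
import numpy as np


def occlusion_saliency(model, image: np.ndarray, patch: int = 4,
                       baseline: float = 0.0) -> np.ndarray:
    """Occlusion saliency: how much the model's single output score drops
    when each patch of the image is replaced by a baseline value."""
    h, w = image.shape
    base_score = model(image)
    saliency = np.zeros(image.shape, dtype=float)
    for y in range(0, h, patch):
        for x in range(0, w, patch):
            occluded = image.copy()
            occluded[y:y + patch, x:x + patch] = baseline
            # Larger drop in score = region mattered more for this one output.
            saliency[y:y + patch, x:x + patch] = base_score - model(occluded)
    return saliency


# Hypothetical toy "classifier": a linear scorer that only looks at the
# upper-left quadrant of a 16x16 image (a stand-in for a real model).
WEIGHTS = np.zeros((16, 16))
WEIGHTS[:8, :8] = 1.0


def toy_model(img: np.ndarray) -> float:
    return float((WEIGHTS * img).sum())


if __name__ == "__main__":
    image = np.random.default_rng(0).random((16, 16))
    sal = occlusion_saliency(toy_model, image)
    print(np.round(sal[::4, ::4], 2))  # one value per 4x4 patch
```

The result is one map explaining one score from one model. In a multi-agent pipeline, the final conclusion depends on many intermediate outputs and exchanges between agents, and a map of this kind describes none of them.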

This technological evolution presents healthcare with a significant challenge: our most sophisticated AI systems are becoming progressively less transparent, thereby creating substantial barriers to clinical trust, regulatory compliance, and ethical medical practice. The expanding gap between what these systems can accomplish and what clinicians can comprehend about their reasoning processes threatens to limit the adoption of otherwise beneficial technologies. As multi-agent systems gain prominence in medical imaging, the field faces the critical task of developing new interpretability frameworks capable of addressing the complexity inherent in these collaborative AI architectures.

Multi-Agent AI Healthcare Evolution: Understanding the Paradigm Shift

Traditional radiology AI systems operated within well-defined parameters, providing clinicians with interpretable outputs such as probability scores and saliency maps that highlighted regions of diagnostic interest. These systems functioned as supplementary diagnostic tools, offering specific insights while maintaining clear distinctions between machine recommendations and human clinical judgment.

Contemporary agentic AI systems represent a paradigm shift in medical technology. These systems deploy multiple specialized agents that collaborate through advanced reasoning mechanisms.

Figure 1. How single AI models differ from multi-agent systems.

While these systems demonstrate superior diagnostic performance in controlled environments, they introduce unprecedented complexity in understanding how clinical decisions are reached.

The Autonomy-Transparency Paradox: A Fundamental Challenge

Recent developments in artificial intelligence have revealed a fundamental tension that poses significant challenges for the field. As AI systems advance in sophistication and operational autonomy, researchers have observed a corresponding decline in the interpretability of their decision-making processes. This phenomenon, which scholars have termed the autonomy-transparency paradox, represents one of the most pressing concerns in contemporary AI development.

The implications of this paradox become particularly acute in medical applications, where the stakes of algorithmic decision-making are highest. Juan Manuel Durán and Karin Jongsma argue that, given the increasing methodological complexity and epistemic opacity of medical AI, the priority is to develop approaches that neither reduce algorithmic intricacy nor merely shift the challenge of opacity onto questions of transparency and auditability (Durán & Jongsma, 2021).

This tension raises profound questions about the nature of trust in clinical settings. If the knowledge produced by medical AI is not firmly grounded as trustworthy, it is fair to ask why physicians should accept its diagnoses and proposed treatments; the underlying concern is epistemic trust in both the system and its results (Durán & Jongsma, 2021).

The challenge before us is not merely technical but fundamentally epistemological, requiring new frameworks for understanding how we validate and trust knowledge derived from increasingly opaque computational systems.

The transparency crisis in medical AI has moved beyond academic debate to create real challenges for practicing physicians. When we examine how this plays out in clinical settings, the scope of the problem becomes clearer. Alex John London’s analysis in the Hastings Center Report captures this dilemma perfectly, highlighting how even our most successful AI systems present fundamental interpretation challenges. Despite their high accuracy, deep learning systems often function as black boxes: while developers may grasp the system’s architecture and the procedures for generating classification models, the resulting models remain difficult for humans to interpret (London, 2019).

What makes this particularly concerning is how these interpretation gaps translate into concrete clinical risks. London identifies several troubling scenarios that emerge when physicians must act on recommendations they cannot fully understand. Following such recommendations risks patient harm through undetected illnesses or the burden of unwarranted further testing (London, 2019). Perhaps even more worrying is the potential for misapplication: such recommendations could encourage applying machine learning in unsuitable contexts, particularly if predictive associations are incorrectly assumed to be causal and used for interventions without prior validation (London, 2019).

Understanding how physicians navigate these challenges has become a priority for researchers studying AI adoption in healthcare. Shevtsova and her team took on this question through a comprehensive investigation that surveyed both the literature and practicing clinicians. Their findings reveal the complex calculus that medical professionals use when deciding whether to trust AI recommendations. The technology-related factors discussed most often in the literature were the accuracy, transparency, reliability, safety, and explainability of medical AI applications and their performance. Key technology-related considerations were also found to include performance factors (e.g., accuracy and reproducibility), the feasibility of incorporating AI applications into existing clinical workflows, a well-defined balance of benefits versus risks, and the transparency and interpretability of processes and outcomes (Shevtsova et al., 2024).

The foundational requirements of healthcare delivery necessitate systems that remain comprehensible to the professionals who utilize them. This imperative extends beyond theoretical considerations to encompass the practical demands of professional accountability for radiologists, the prerequisites for meaningful informed consent from patients, and the regulatory oversight essential for ensuring safety and efficacy. However, we are witnessing a paradoxical development whereby our most sophisticated AI systems are evolving beyond the explanatory frameworks originally conceived for less complex technologies.

The mathematical dimensions of this evolution present particularly troubling implications that warrant serious examination. The interaction effects within complex AI architectures do not merely aggregate individual capabilities; they can systematically degrade overall system performance through compounding errors. When multiple AI agents each operate at 90% accuracy, the compounded system accuracy may fall to approximately 59% as errors propagate from one agent to the next. This is the figure obtained for five agents in sequence with independent errors, since 0.9^5 ≈ 0.59. This phenomenon has been examined by Nweke and colleagues in their systematic review of multi-agent AI systems in healthcare applications (Nweke et al., 2025).
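The 59% figure follows from multiplying per-agent accuracies. Below is a minimal sketch of that arithmetic under the simple compounding model: the agents run in sequence, their errors are independent, and any single error corrupts the final output. Real systems may catch or cancel some errors, so this is an illustration of the arithmetic, not a model of any specific architecture. The function name `chained_accuracy` is hypothetical.

```python
from math import prod


def chained_accuracy(accuracies: list[float]) -> float:
    """Probability that every agent in a sequential chain is correct.

    Simplifying assumptions (illustrative only): errors are independent,
    and one agent's error always propagates to the final output.
    """
    for a in accuracies:
        if not 0.0 <= a <= 1.0:
            raise ValueError(f"accuracy must be in [0, 1], got {a}")
    return prod(accuracies)


if __name__ == "__main__":
    # Compounded accuracy for chains of 1 to 6 agents, each 90% accurate.
    for n in range(1, 7):
        print(f"{n} agent(s): {chained_accuracy([0.9] * n):.3f}")
```

With five agents the result is about 0.590, matching the approximately 59% figure; a sixth agent drops it to about 0.531.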

Implementation studies have revealed several critical concerns that extend beyond purely technical considerations. A common concern was the poor interoperability with legacy EHR systems, which disrupted smooth data integration and delayed the adoption of new systems (Nweke et al., 2025). These technical limitations have profound implications for healthcare equity and representation. Data bias and inequity were highlighted as concerns, especially in cases where historical datasets failed to adequately represent diverse populations (Nweke et al., 2025).

The governance challenges posed by these systems represent perhaps the most significant concern for healthcare institutions and policymakers. Without clear accountability structures, AI-driven decision-making raises pressing ethical and legal issues, particularly in situations where such outputs guide critical clinical choices (Nweke et al., 2025). This development signals a fundamental transformation in our conceptualization of medical responsibility and the allocation of decision-making authority within clinical practice.

Radiology AI Transparency Challenges: The Growing Complexity


The opacity inherent in multi-agent AI systems creates significant barriers to their adoption in clinical practice and compliance with professional standards. Healthcare professionals need a reasonable understanding of AI decision-making processes to maintain their clinical responsibilities and provide appropriate patient care. While explainable AI methods may work well for understanding single decision outputs, they face serious limitations when dealing with AI agents that carry out complex sequences of planning steps and actions based on detailed observational data. This basic constraint changes the relationship between clinicians and AI technology, turning what should be a collaborative partnership into a troubling dependence on opaque systems.

The theoretical foundations for understanding these challenges have been carefully studied by researchers examining the complex nature of trust in medical AI applications, including Gille and colleagues in “What we talk about when we talk about trust: Theory of trust for AI in healthcare.” Trust in medical AI extends beyond performance metrics to integrate transparency, regulatory compliance, and clinical value (Gille et al., 2020). This broader view reveals why purely technological solutions to interpretability problems fall short. Technical approaches by themselves are insufficient to overcome the so-called “interpretability problem” (Gille et al., 2020). Providing the desired level of interpretability requires merging technical approaches in explainable AI with ethical and legal insights that address the subtle differences between explanation, interpretation, and understanding (Gille et al., 2020).

The practical consequences of these limitations appear directly in clinical settings. Without understandable decision-making processes, radiologists face considerable difficulties in obtaining meaningful informed consent from patients about AI-influenced treatment recommendations. Research consistently shows that trust and acceptance of AI applications in medical practice depend on multiple factors beyond technical performance, including transparency, fairness, and demonstrated clinical value.

Regulatory and Accountability Challenges in Healthcare AI

Multi-agent AI systems present new challenges for medical accountability and regulatory oversight. Current validation protocols and regulatory frameworks were designed for single-point decision systems with clear, transparent reasoning pathways, an assumption that does not hold for collaborative AI agent networks. When multiple agents contribute to diagnostic decisions through complex interactions, determining responsibility for errors becomes exceptionally difficult. Gabriel and colleagues have argued that a society inhabited by millions of autonomous AI agents will encounter both social and technological challenges, demanding proactive guidance and foresight (Gabriel et al., 2025).
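The difficulty of assigning responsibility can be illustrated with a deliberately simplified sketch. It assumes a hypothetical three-agent chain (`detector`, `measurer`, `reporter`) run in strict sequence, a hypothetical reference annotation for each step, and a hypothetical 8 mm flagging threshold that is not a clinical rule. Real multi-agent systems may exchange messages in loops or in parallel, which makes attribution harder still. If only the final answer is kept, an error is visible but not attributable; if every intermediate output is recorded, the first divergent step can be located.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class StepRecord:
    agent: str
    received: Any
    produced: Any


@dataclass
class TracedPipeline:
    """Runs agents in sequence and records each agent's input and output."""
    agents: list[tuple[str, Callable[[Any], Any]]]
    trace: list[StepRecord] = field(default_factory=list)

    def run(self, x: Any) -> Any:
        self.trace.clear()
        for name, agent in self.agents:
            y = agent(x)
            self.trace.append(StepRecord(name, x, y))
            x = y
        return x


def first_divergent_step(trace: list[StepRecord],
                         reference: dict[str, Any]) -> Optional[str]:
    """Name of the first agent whose output differs from its reference value
    (e.g., a hypothetical expert annotation), or None if all steps match."""
    for record in trace:
        if record.agent in reference and record.produced != reference[record.agent]:
            return record.agent
    return None


if __name__ == "__main__":
    # Hypothetical agents; the measurer uses a wrong pixel spacing
    # (0.5 mm instead of the 0.8 mm assumed by the reference annotation).
    pipeline = TracedPipeline([
        ("detector", lambda img: {"finding": True, "diameter_px": 12}),
        ("measurer", lambda d: {**d, "diameter_mm": d["diameter_px"] * 0.5}),
        ("reporter", lambda d: "flag" if d["diameter_mm"] >= 8 else "no flag"),
    ])
    final = pipeline.run(None)  # the image is irrelevant in this toy example
    reference = {
        "detector": {"finding": True, "diameter_px": 12},
        "measurer": {"finding": True, "diameter_px": 12, "diameter_mm": 9.6},
        "reporter": "flag",
    }
    print(final, first_divergent_step(pipeline.trace, reference))
```

Here the final output alone ("no flag") only shows that something went wrong; the recorded trace points to `measurer` as the first step that departed from the reference.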

The distributed nature of decision-making in multi-agent systems complicates the application of existing medical device regulations. These systems require new regulatory approaches that can address distributed accountability, dynamic decision-making processes, and emergent behaviors that arise from agent interactions.

Continue Reading This Series

This introduction provides the foundation for understanding AI transparency challenges in radiology. Next, explore practical solutions in Part 2: Framework, Regulation & Future Directions, which covers implementation strategies, regulatory approaches, and future considerations for responsible AI adoption in clinical practice.

Written by

Sara Salehi, MD

Bradley J. Erickson, MD, PhD, CIIP, FSIIM

Yashbir Singh, PhD

Publish Date

Sep 9, 2025

Topic

- Artificial Intelligence

- Machine Learning

- Radiology

- Research

Resource Type

- Blog

Audience Type

- Clinician

- Developer

- Imaging IT

- Researcher/Scientist

- Vendor
